Previously, I calculated the normal of each vertex in order to do some shading. The result was quite nice, but the detail wasn’t great and made the mountains look rounded. Normal mapping is a technique whereby you create a texture which contains normal information at texture detail (as opposed to at vertex detail) and use this when calculating lighting for a pixel. It means much higher shading detail without having to increase the number of points in the model. Both of these projects use it so I thought I’d give it a lash.

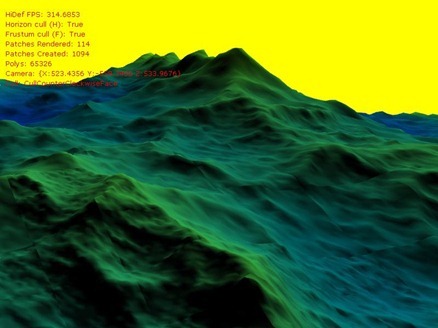

As with all these graphical things, the first go was pretty rewarding, but getting it (almost) right was very tough work! Here’s the first pic; you can see the shading is going to be much more detailed than before:

Basically there are 3 main things involved here: 1) lighting and shading, 2) creating the normal map from a height map, and 3) calculating the normals, binormals and tangents to work out the lighting. For 1) I took an HLSL sample of bump mapping from rbwhitaker's bump map tutorial, but 2) and 3) are a little more complicated. Let’s talk about 3), binormals and tangents, first.

A normal is a line pointing straight out from the surface, and calculating it for a sphere is easy enough: just normalise the vector from the centre to the point. Binormals and tangents aren’t actually that complicated, but I found a few online articles that make them seem so, so I’ll try to simplify here. They are both lines tangential to the sphere (so should really be called tangent & bitangent) but at 90 degrees to each other. It’s easiest to visualise them as the north and east arrows on a map: the binormal says which way is north, and the tangent says which way is east.

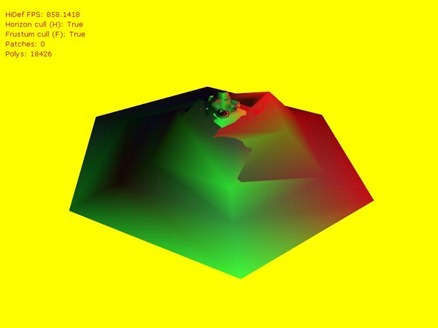

Of course on a sphere you can calculate them as if they were north & east, but the poles get a little complicated because at the top of the north pole, nowhere is north and nowhere is east – any direction is south! Applying this to my planet resulted in some male pattern baldness at the poles:

This image shows the normals (pink), binormals (blue) and tangents (red); you can see how at the pole they shrink to a dot and the lighting is wrong.
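The pinching is easy to reproduce away from the GPU. Here’s a minimal Python sketch (not the project’s C# code) of the north/east idea: the tangent is taken as "east" by crossing an assumed Y-up polar axis with the normal, and the binormal as "north". The `UP` axis, the right-handed convention and the `north_east_frame` name are my assumptions for illustration.

```python
import math

UP = (0.0, 1.0, 0.0)  # assumed polar axis (Y-up); not taken from the post


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def length(v):
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def normalize(v):
    n = length(v)
    return (v[0] / n, v[1] / n, v[2] / n)


def north_east_frame(point):
    """Return (normal, tangent, binormal) for a point on a sphere centred at the origin.

    The tangent points east and the binormal points north. At the poles
    there is no east, so tangent and binormal come back as None.
    """
    normal = normalize(point)
    east = cross(UP, normal)
    if length(east) < 1e-6:  # at (or extremely near) a pole
        return normal, None, None
    tangent = normalize(east)
    binormal = cross(normal, tangent)  # unit length because both inputs are
    return normal, tangent, binormal


print(north_east_frame((1.0, 0.0, 0.0)))  # a point on the equator
print(north_east_frame((0.0, 1.0, 0.0)))  # the north pole: no tangent or binormal
```

On the equator the frame is well defined, but as a point approaches the pole the cross product shrinks towards zero length, which is the dot in the image above.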

So how to fix this? Luckily, the planet is actually made from 6 subdivided faces of a cube, so each face can have its own north and east without ever having a north pole. For example, the face on the top of the cube has its north (binormal) pointing along the z-axis and its east (tangent) pointing along the x-axis, with no pole pinching. So code to calculate the normal, binormal and tangent would look something like this for any point on any face:

Vector3 normal = Vector3.Normalize(point);

Vector3 tangent = Vector3.Normalize(pointToTheLeft - pointToTheRight);

Vector3 binormal = Vector3.Normalize(pointAbove - pointBelow);
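To check that the per-face approach really avoids the pinch, here’s a rough Python sketch of the same neighbour-difference idea for the top face only. The `top_face_point` mapping (normalising a point on the cube face `y = 1`), the `step` size and the choice of `right - left` and `above - below` as positive directions are assumptions for illustration; the sign you want depends on your own winding and handedness.

```python
import math


def normalize(v):
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def top_face_point(u, v):
    """Map (u, v) in [-1, 1] on the cube's top face (y = 1) onto the unit sphere."""
    return normalize((u, 1.0, v))


def frame_at(u, v, step=0.01):
    """Normal, tangent and binormal from a point and its four grid neighbours."""
    normal = top_face_point(u, v)  # on a sphere the normal is just the normalised point
    tangent = normalize(sub(top_face_point(u + step, v),
                            top_face_point(u - step, v)))   # east: along x
    binormal = normalize(sub(top_face_point(u, v + step),
                             top_face_point(u, v - step)))  # north: along z
    return normal, tangent, binormal


# The centre of the top face is where the sphere's north pole would be,
# yet the frame is perfectly well defined here.
print(frame_at(0.0, 0.0))
```

Away from the centre of a face the tangent and binormal are only approximately perpendicular to each other, because the cube grid is stretched when it is projected onto the sphere.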

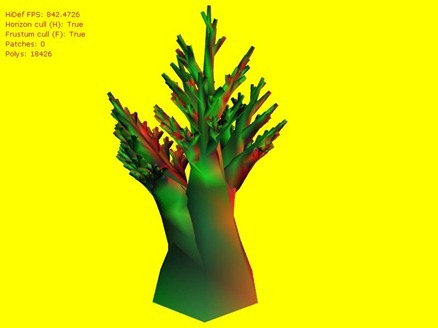

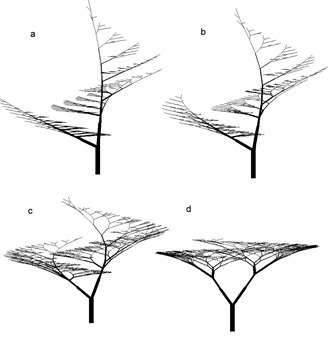

At the edges there’s a problem, e.g. there’s no point above the top row, but this can be resolved with skirting. I’ll post about that next time because I haven’t gotten it right yet… although that’s never stopped me before!! Also, for non-spherical models the normal would have to be worked out from a point’s neighbours, but this simple example will do for now. Here’s what this looks like with a standard normal map (a bump). There are multiple lines in most places because each face and each sub-patch calculates its own normals, binormals and tangents for shared points:

Ok so that’s binormals and tangents, now how to work out the normal map dynamically given a height map? Luckily for me there’s a thing called a Sobel filter, a pair of small 3×3 kernels that estimate how quickly the height changes in x and in y around a pixel, which is what you need to build its normal. To simplify it a bit: to work out the normal of a pixel, it gets the y-magnitude (binormal) by comparing the heights above and below, and the x-magnitude (tangent) by comparing the heights to the left and right. Even more luckily for me (and my shader phobia), Catalin Zima blogged some HLSL code that implements the Sobel filter. Unfortunately it’s not that easy; when I tried it out I got some strange results:

Each face on its own looks ok but they obviously differ at the borders … what’s going on?? This problem was a real headwrecker, and it just highlights that you can’t always plug things in from various sources & expect them to work without having a proper understanding of what’s going on. A hint is that the normal map created by Catalin’s code as shown in the first image of this post is generally green while most other examples of normal maps are generally purple. It boils down to one place where the dx and dy components are put in the red and blue channels whereas usually they are put in the red and green channels of the pixel:

float4 N = float4(normalize(float3(dX, 1.0f / normalStrength, dY)), 1.0f);

This is absolutely fine if the lighting calculation reads x & y from the same channels, but it breaks down when the lighting expects them in different channels.
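To make the channel mix-up concrete, here’s a small Python sketch of the two layouts for a perfectly flat bit of terrain (dX = dY = 0). The function names are made up for illustration; the maths mirrors the `float3(...)` constructor above and the usual `* 0.5 + 0.5` packing into a colour.

```python
import math


def normalize(v):
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def encode(vec):
    """Pack a unit vector with components in [-1, 1] into colour channels in [0, 1]."""
    return tuple(c * 0.5 + 0.5 for c in vec)


def decode(rgb):
    """Unpack a colour back into a vector, as a lighting shader would."""
    return tuple(c * 2.0 - 1.0 for c in rgb)


def normal_red_blue_layout(dx, dy, strength):
    # dX in red, dY in blue, "straight up" in green (the layout in the line above)
    return normalize((dx, 1.0 / strength, dy))


def normal_red_green_layout(dx, dy, strength):
    # dX in red, dY in green, "straight up" in blue (the more common layout)
    return normalize((dx, dy, 1.0 / strength))


flat_green = encode(normal_red_blue_layout(0.0, 0.0, 0.5))
flat_purple = encode(normal_red_green_layout(0.0, 0.0, 0.5))
print(flat_green)           # green channel dominates
print(flat_purple)          # blue channel dominates
print(decode(flat_green))   # what a blue-is-up lighting shader would read back
```

A lighting shader that expects "up" in the blue channel decodes the green-ish texel as a normal lying flat along y instead of pointing out of the surface, so every face ends up lit as if it were tilted by 90 degrees.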

I changed this to use the more conventional channels and added in a level parameter to halve the normalStrength when calculating a normal map for a subdivided patch (it’s the same area image but half the normal intensity). Here’s the full normal map creation shader I used, adapted from Catalin’s:

// copied from here http://www.catalinzima.com/tutorials/4-uses-of-vtf/terrain-morphing/

float normalStrength = 0.5;

float texelWidth = 1; // calculate as 1f / textureSideLength

int level = 1; // the patch subdivision level

float4 PSNormal(vertexOutput IN): COLOR

{

float tl = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2(-1.0, -1.0)).x); // top left

float l = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2(-1.0, 0.0)).x); // left

float bl = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2(-1.0, 1.0)).x); // bottom left

float t = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2( 0.0, -1.0)).x); // top

float b = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2( 0.0, 1.0)).x); // bottom

float tr = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2( 1.0, -1.0)).x); // top right

float r = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2( 1.0, 0.0)).x); // right

float br = abs(tex2D(heightMapSampler, IN.texcoord.xy + texelWidth * float2( 1.0, 1.0)).x); // bottom right

// Compute dx using Sobel:

// -1 0 1

// -2 0 2

// -1 0 1

float dX = tr + 2.0*r + br -tl - 2.0*l - bl;

// Compute dy using Sobel:

// -1 -2 -1

// 0 0 0

// 1 2 1

float dY = bl + 2.0*b + br -tl - 2.0*t - tr;

float4 N = float4(normalize(float3(dX, dY, 1.0 / (normalStrength * (1 + pow(2, level))))), 1.0);

// was float4 N = float4(normalize(float3(dX, 1.0f / normalStrength, dY)), 1.0f);

return N * 0.5 + 0.5;

}
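If you want to sanity-check the shader’s numbers on the CPU, the same calculation can be sketched in plain Python. This is only an approximation of what the GPU does: the height map is a list of rows, samples are clamped at the borders (an assumed addressing mode), and `sobel_normal` is a name I made up. The weights, the `abs()` on each sample and the z term mirror the HLSL above.

```python
import math


def sobel_normal(heights, x, y, normal_strength=0.5, level=1):
    """Return the packed (r, g, b) normal-map colour for pixel (x, y)."""
    rows, cols = len(heights), len(heights[0])

    def h(ix, iy):
        # Clamp to the map edges; an assumption standing in for the sampler state.
        ix = min(max(ix, 0), cols - 1)
        iy = min(max(iy, 0), rows - 1)
        return abs(heights[iy][ix])

    tl, t, tr = h(x - 1, y - 1), h(x, y - 1), h(x + 1, y - 1)
    l, r = h(x - 1, y), h(x + 1, y)
    bl, b, br = h(x - 1, y + 1), h(x, y + 1), h(x + 1, y + 1)

    # Sobel kernels: right column minus left column, bottom row minus top row.
    dx = tr + 2.0 * r + br - tl - 2.0 * l - bl
    dy = bl + 2.0 * b + br - tl - 2.0 * t - tr
    dz = 1.0 / (normal_strength * (1 + 2 ** level))

    n = math.sqrt(dx * dx + dy * dy + dz * dz)
    # Pack from [-1, 1] into [0, 1], like "N * 0.5 + 0.5" in the shader.
    return tuple(c / n * 0.5 + 0.5 for c in (dx, dy, dz))


flat = [[0.3] * 3 for _ in range(3)]
ramp = [[0.1 * col for col in range(3)] for _ in range(3)]  # rises to the right
print(sobel_normal(flat, 1, 1))  # flat ground: the typical purple-ish texel
print(sobel_normal(ramp, 1, 1))  # slope in x: the red channel moves off 0.5
```

Clamping at the borders is also a cheap way to see the patch-edge problem: pixels on the edge never see the heights that belong to the neighbouring patch.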

The shader gives some purdy results, but there’s still more to do (you can see a faint dividing line down the centre of the first image because normals aren’t calculated correctly for patch edges, so I’ll need to skirt around a patch to get the values past the borders):

FPS and video memory are getting hit hard so I’ll clean up the code & make sure I’m not doing anything I don’t need to. Next I’ll do skirting so I can get rid of those faint but pesky dividing lines.